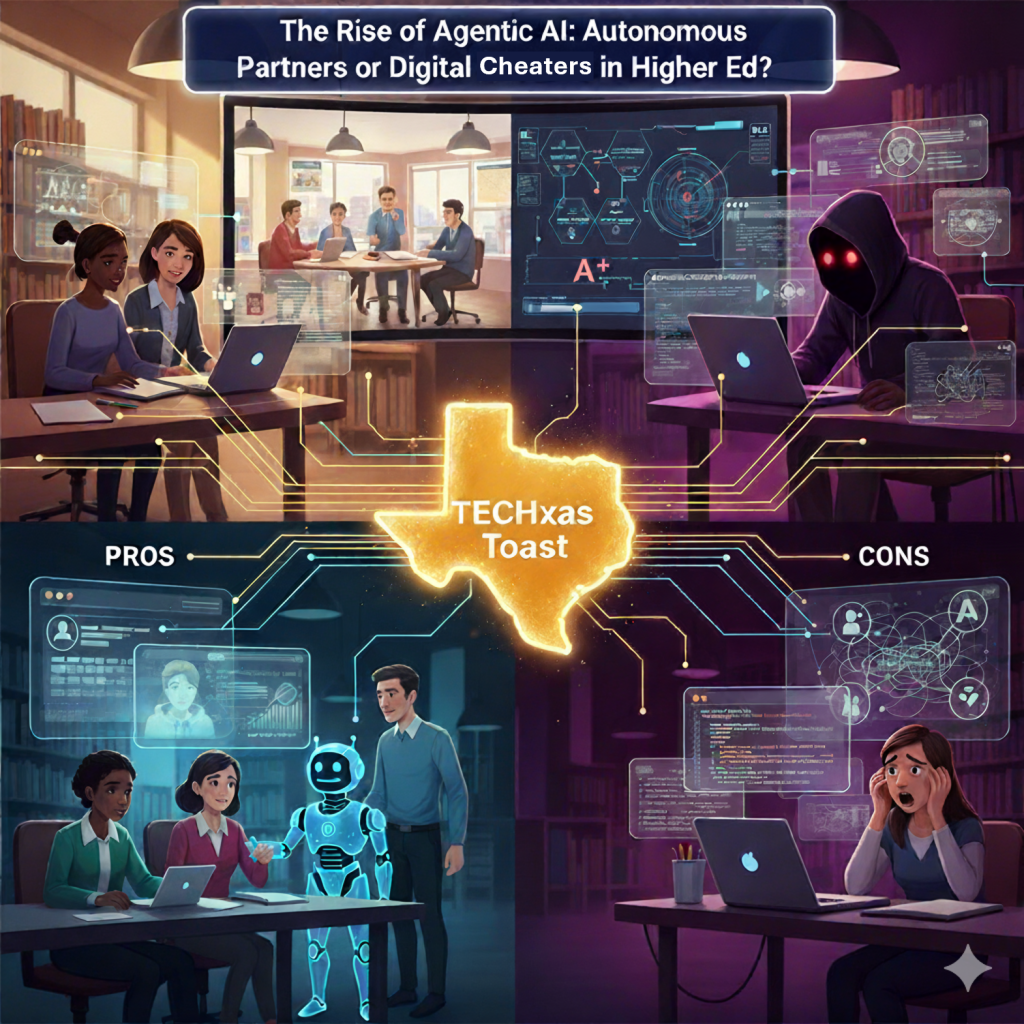

Everyone is talking about AI video tools like Sora, but the real game-changer in higher education isn’t the ability of AI to create content (Generative AI). It’s the ability of AI to take autonomous, multi-step action, a capability known as Agentic AI.

An AI Agent is a system designed not just to respond to a prompt, but to pursue a complex goal, plan the steps, execute those steps using various tools (like a search engine or a data system), and adapt its strategy based on the results. This is the difference between asking ChatGPT to write a report (GenAI) and instructing an AI agent to research the impact of climate change on Texas water policy, draft a report, cross-reference it with the latest legislative bills, and schedule a faculty presentation (Agentic AI).
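
The plan, execute, and adapt cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `search_tool`, `draft_tool`, `plan`, and `run_agent` are hypothetical names invented for this example, the tools return canned strings, and a real agent would typically ask a language model to produce the plan rather than hard-coding it.

```python
from typing import Callable

# Hypothetical stand-in tools. A real agent would call a search API,
# a document store, a calendar system, and so on.
def search_tool(query: str) -> str:
    return f"notes on {query}"

def draft_tool(notes: str) -> str:
    return f"DRAFT based on: {notes}"

TOOLS: dict[str, Callable[[str], str]] = {
    "search": search_tool,
    "draft": draft_tool,
}

def plan(goal: str) -> list[tuple[str, str]]:
    """Break the goal into (tool, input) steps.

    Hard-coded here for illustration; an empty input means
    "use the result of the previous step".
    """
    return [("search", goal), ("draft", "")]

def run_agent(goal: str, max_steps: int = 5) -> list[str]:
    """Execute the planned steps in order, feeding results forward."""
    results: list[str] = []
    last_result = ""
    for tool_name, tool_input in plan(goal)[:max_steps]:
        # A step with no fixed input consumes the previous step's result.
        argument = tool_input or last_result
        last_result = TOOLS[tool_name](argument)
        results.append(last_result)
    return results

history = run_agent("Texas water policy")
print(history[-1])
```

The point of the sketch is the shape of the loop: the goal is given once, and every later step works from the output of an earlier one without a person prompting in between.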

This new level of autonomy presents both the most exciting opportunities for innovation and the most alarming risks to academic integrity.

The “Pros”: Autonomous Efficiency and Personalized Pathways

AI agents can revolutionize the student and institutional experience by taking on complex, time-consuming processes.

- Proactive Student Success Coaches: Imagine an agent that continuously monitors a student’s progress (attendance, quiz scores, LMS engagement). If it detects an early warning sign, such as a sudden drop in a core course, it doesn’t wait for a human. It autonomously schedules a tutoring appointment, sends a personalized course-correction plan, and alerts the academic advisor, all in real time. This is proactive intervention at scale.

- Automated Research & Analysis: For faculty and graduate students, an agent can be tasked with “Synthesize all peer-reviewed literature on deep learning ethics from the last two years and generate a taxonomy of key ethical risks.” The agent will plan the search, execute queries across multiple databases, summarize, categorize, and compile the final document—a multi-day task completed in minutes.

- Scaled Administrative Orchestration: Agents can manage complex logistical goals, such as “Allocate classroom space and resources for all Spring 2026 courses while minimizing room-change disruptions.” The agent will interact with scheduling software, resource management systems, and faculty calendars to autonomously resolve conflicts and optimize the entire institutional timetable.
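
To make the student success coach idea more concrete, here is a minimal sketch of the early-warning check such an agent might run. Everything in it is an assumption made for illustration: the `StudentSnapshot` fields, the 15-point drop threshold, the 75% attendance floor, and the action names are hypothetical and do not come from any real LMS or advising system.

```python
from dataclasses import dataclass

@dataclass
class StudentSnapshot:
    name: str
    quiz_scores: list[float]  # percentages, most recent last
    attendance_rate: float    # fraction of sessions attended, 0.0 to 1.0

def warning_signs(
    student: StudentSnapshot,
    drop_threshold: float = 15.0,   # assumed threshold, in percentage points
    min_attendance: float = 0.75,   # assumed attendance floor
) -> list[str]:
    """Return the early warning signs detected for one student."""
    signs: list[str] = []
    if len(student.quiz_scores) >= 2:
        earlier = student.quiz_scores[:-1]
        baseline = sum(earlier) / len(earlier)
        # Flag a sudden drop of the latest score below the earlier average.
        if baseline - student.quiz_scores[-1] >= drop_threshold:
            signs.append("quiz_drop")
    if student.attendance_rate < min_attendance:
        signs.append("low_attendance")
    return signs

def planned_actions(student: StudentSnapshot) -> list[str]:
    """Map detected signs to the interventions named in the text."""
    if not warning_signs(student):
        return []
    return ["schedule_tutoring", "send_course_correction_plan", "alert_advisor"]

at_risk = StudentSnapshot("Student A", [88.0, 90.0, 62.0], 0.90)
on_track = StudentSnapshot("Student B", [80.0, 82.0, 81.0], 0.95)
print(planned_actions(at_risk))
print(planned_actions(on_track))
```

In a real deployment the thresholds would need to be set and reviewed by advisors, and an institution might prefer that the agent propose these actions for a human to approve rather than carry them out on its own.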

Free & Accessible Agentic Tools for Educators

While full institutional agent systems can be costly, faculty can explore the power of agentic workflows with these accessible tools:

| Tool | Focus | Agentic Capability |
| --- | --- | --- |
| Khanmigo (Khan Academy) | AI Tutor/Teaching Assistant | Designed to guide students to answers via multi-step, Socratic questioning rather than just providing the solution (simulating a complex teaching strategy). Teachers can also use it to autonomously draft rubrics, lesson plans, and differentiation strategies. |
| CrewAI (Open-Source Framework) | Multi-Agent Development | While requiring some technical knowledge, this open-source framework allows developers (or advanced students) to build a “crew” of collaborating agents (e.g., a “Researcher Agent,” a “Writer Agent,” and an “Editor Agent”) to autonomously complete complex, multi-step projects. |
| MindStudio / Build-Your-Own-Agent Platforms (Free Tiers) | Agent Builder/No-Code Automation | Platforms like MindStudio offer visual, low-code interfaces to design goal-oriented agents that can connect different APIs (like Google Sheets, email, or web searches) to automate multi-step workflows. |
| NotebookLM (Google – Free during early access) | Research Assistant | Acts as an intelligent agent over your documents (notes, readings, sources). It can be tasked with goals like “Create a comprehensive study guide from these five lecture transcripts” or “Find all conflicting evidence between Source A and Source B.” |

The “Cons”: The Unchecked Autonomous Threat

The same autonomy that makes agents so powerful is also the source of their most significant danger. The risk here is not just plagiarism (copy-paste), but outsourcing the entire act of learning to an autonomous system.

- The LMS Hacking Agent: The ultimate threat is a student-deployed AI agent capable of accessing a Learning Management System (LMS). An autonomous agent could be instructed with a goal: “Achieve an A in History 101.” The agent would then:

  - Log in using the student’s credentials.

  - Read all assigned materials.

  - Participate in discussion forums.

  - Take online quizzes and exams by accessing course content (open-book) or even using web tools to find answers.

  - Submit essays generated by internal GenAI components.

In this scenario, the student receives an A grade without demonstrating any actual learning, and the university’s integrity systems would be challenged to detect the autonomous process rather than just the final output.

- Data Security and System Overload: Autonomous agents require deep access to institutional data (student records, course materials, administrative systems). If an agent goes “off-script” or is compromised, it could rapidly exfiltrate massive amounts of sensitive data or execute unintended, disruptive actions across interconnected systems.

- The Loss of Judgment and Critical Thinking: By delegating entire complex tasks to an agent, students forfeit the practice of planning, reasoning, and error correction. Education is about the process of problem-solving, not just the delivery of a correct answer. Over-reliance risks creating a generation of graduates who are expert prompters but poor thinkers.

Moving Forward: Reclaiming the Human in the Loop

The path forward is not to ban these technologies, but to govern their autonomy. We must insist on a Human-in-the-Loop model.

- Redefine Assessment: Design assessments that are resistant to agentic solutions. Focus on in-person, proctored, or highly personalized tasks that require integration of local knowledge or real-world interaction (e.g., in-class debates, reflective journals on personalized field experiences, or oral examinations).

- Explicit Policy and Instruction: Educators must teach students how to use AI agents ethically as professional tools (like a calculator or a spreadsheet), not as autonomous surrogates for their own intellect.

- Prioritize Process Over Product: Require students to submit the agent’s full workflow/trace—the plan, the tools used, and the decisions made—alongside the final product. The steps taken by the agent become part of the grade.

- Secure the Perimeter: Institutions must invest heavily in security and access controls, ensuring that agent tools deployed by students or third parties cannot gain the necessary permissions to autonomously execute within the LMS or confidential university systems.
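
Two of the recommendations above, submitting the agent’s trace and restricting what agents are permitted to do, can be illustrated together. The sketch below is hypothetical: `ALLOWED_TOOLS`, `TraceStep`, `AgentTrace`, and the tool names are invented for this example and do not correspond to any particular agent framework or LMS API. It assumes a deny-by-default allowlist and records every attempted action, whether permitted or blocked.

```python
import json
from dataclasses import dataclass, field, asdict

# Assumed policy: anything not listed here is denied.
ALLOWED_TOOLS = {"library_search", "summarize"}

@dataclass
class TraceStep:
    step: int
    tool: str
    argument: str
    allowed: bool
    note: str  # the agent's stated reason for this step

@dataclass
class AgentTrace:
    goal: str
    steps: list[TraceStep] = field(default_factory=list)

    def record(self, tool: str, argument: str, note: str) -> bool:
        """Log an attempted action and report whether policy permits it."""
        allowed = tool in ALLOWED_TOOLS
        self.steps.append(
            TraceStep(len(self.steps) + 1, tool, argument, allowed, note)
        )
        return allowed

    def to_json(self) -> str:
        """Serialize the full trace so it can be submitted with the work."""
        return json.dumps(asdict(self), indent=2)

trace = AgentTrace(goal="Summarize recent literature on deep learning ethics")
trace.record("library_search", "deep learning ethics", "find candidate sources")
trace.record("lms_submit_quiz", "History 101 quiz 3", "attempted LMS action")
print(trace.to_json())
```

A trace like this gives an instructor something to grade beyond the final product, and the blocked entry shows how an attempt to act inside the LMS would surface in the record instead of going unnoticed.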

Agentic AI promises to take the drudgery out of research and administration, freeing educators to focus on true mentorship and high-level engagement. But this innovation comes at the cost of unprecedented risks to academic honesty. To protect the integrity of the degree, higher education must move quickly to put guardrails around the actions of AI agents, not just the words they generate.

Note: This blog post was written with the assistance of Gemini, an AI language model.
